Human Sensing Using Visible Light Communication

Abstract

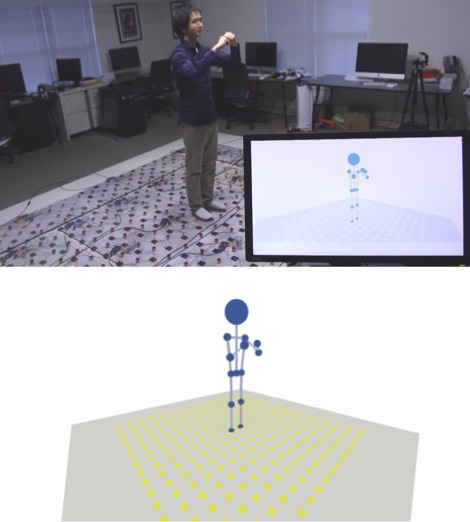

We present LiSense, the first-of-its-kind system enabling both data communication and fine-grained, real-time human skeleton reconstruction using Visible Light Communication (VLC). LiSense uses the shadows cast by the human body when it blocks light and reconstructs 3D human skeleton postures in real time. We overcome two key challenges to realize shadow-based human sensing. First, multiple lights on the ceiling lead to diminished and complex shadow patterns on the floor. We design light beacons enabled by VLC to separate light rays from different light sources and recover the shadow pattern cast by each individual light. Second, we design an efficient inference algorithm that reconstructs user postures from low-resolution 2D shadow information collected by photodiodes embedded in the floor. We build a 3 m × 3 m LiSense testbed using off-the-shelf LEDs and photodiodes. Experiments show that LiSense reconstructs the 3D user skeleton at 60 Hz in real time with a 10° mean angular error for five body joints.
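The abstract does not spell out how the VLC beacons let a single floor photodiode tell the ceiling LEDs apart. The sketch below illustrates one plausible scheme under a loudly stated assumption: each LED flickers as a sinusoid at its own distinct frequency, so the photodiode signal is a sum of tones and a single-bin DFT at each tone recovers how much light from that LED still reaches the sensor. Comparing against an unblocked baseline then flags which LEDs are shadowed at that photodiode. The function names `light_contributions` and `blocked_lights`, the beacon frequencies, the sampling rate, and the 0.5 shadow threshold are all hypothetical and are not taken from LiSense.

```python
import math


def light_contributions(samples, sample_rate, beacon_freqs):
    """Estimate how much light from each LED reaches one photodiode.

    Hypothetical scheme (an assumption, not LiSense's stated design): LED i
    flickers as a sinusoid at its own frequency beacon_freqs[i]. A single-bin
    DFT at each frequency recovers that LED's received amplitude even though
    the photodiode only measures the summed light from all LEDs.
    """
    n = len(samples)
    mean = sum(samples) / n  # DC term: ambient light plus average LED power
    amplitudes = {}
    for f in beacon_freqs:
        re = im = 0.0
        for k, s in enumerate(samples):
            phase = 2 * math.pi * f * k / sample_rate
            re += (s - mean) * math.cos(phase)
            im += (s - mean) * math.sin(phase)
        # Scale so a sinusoid of amplitude A yields A (exact when the window
        # holds an integer number of cycles of every beacon frequency).
        amplitudes[f] = 2 * math.hypot(re, im) / n
    return amplitudes


def blocked_lights(current, baseline, threshold=0.5):
    """Return beacon frequencies whose amplitude fell below threshold * baseline,
    i.e. LEDs whose line of sight to this photodiode is likely shadowed."""
    return sorted(f for f, a in current.items() if a < threshold * baseline[f])


if __name__ == "__main__":
    fs = 10_000                 # hypothetical photodiode sampling rate (Hz)
    freqs = [1000, 1500, 2000]  # hypothetical per-LED beacon frequencies (Hz)
    gains = [1.0, 0.2, 0.8]     # simulated: the 1500 Hz LED is mostly blocked
    samples = [
        0.3 + sum(g * math.sin(2 * math.pi * f * k / fs) for f, g in zip(freqs, gains))
        for k in range(1000)    # 0.1 s window: 100, 150 and 200 full cycles
    ]
    est = light_contributions(samples, fs, freqs)
    print({f: round(a, 3) for f, a in est.items()})
    print(blocked_lights(est, {f: 1.0 for f in freqs}))
```

Choosing frequencies that complete a whole number of cycles in the analysis window keeps the DFT bins orthogonal, so each LED's estimate is unaffected by the others. Repeating this per photodiode yields one shadow map per LED, which is the kind of per-light input the inference step described above consumes.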

Publications

- Tianxing Li, Chuankai An, Zhao Tian, Andrew T. Campbell, and Xia Zhou. Human Sensing Using Visible Light Communication. ACM Conference on Mobile Computing and Networking (MobiCom), September 2015, Paris, France. Best Video Award.

- Xia Zhou and Andrew T. Campbell. Visible Light Networking and Sensing. The 1st ACM Workshop on Hot Topics in Wireless (HotWireless), September 2014, Maui, Hawaii.

Acknowledgment

We sincerely thank the anonymous reviewers for their valuable comments. We also thank Guanyang Yu and Xiaole An for their contributions to visualizing the user skeleton, Xing-Dong Yang for his support with the Kinect experiments, and Will Campbell and DartNets Lab members Fanglin Chen, Peilin Hao, and Rui Wang for their help with our experiments. We especially thank Amy Y. Zhang '17 and Lorie Loeb for helping produce the demo video, and Roy Prochorchik and Ronald Peterson for their insightful suggestions and help in engineering the glass top of the testbed. This work is supported in part by the National Science Foundation under grant CNS-1421528. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect those of the funding agencies or others.

Press

- Biophotonics: Smart Light Captures Body in Motion

- gizmag: Smart light lets you control your environment

- Phys.Org: Team uses smart light, shadows to track human posture

- ScienceDaily: Smart light, shadows used to track human posture

- EurekAlert!: Dartmouth team uses smart light, shadows to track human posture
